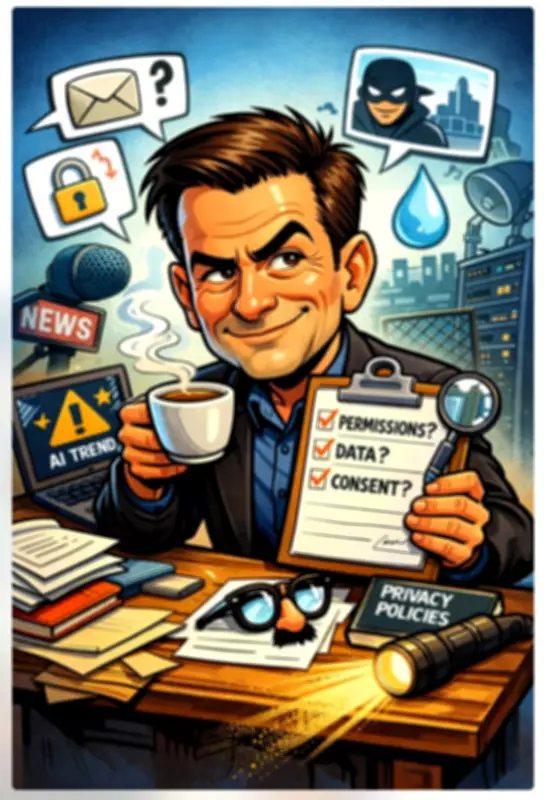

AI Caricature Trend Poses Sinister Risks, Cybersecurity Expert Warns

The viral trend of using artificial intelligence to generate humorous caricatures of oneself or others carries dangerous and often overlooked privacy and security implications, according to a prominent cybersecurity expert. While these AI-powered image transformations have flooded social media platforms with amusing results, experts caution that the underlying technology may be harvesting sensitive biometric data with potentially severe consequences.

Hidden Dangers Behind Digital Amusement

Claudiu Popa, a cybersecurity analyst and privacy advocate, warns that many users are unknowingly surrendering valuable personal information when participating in these AI caricature trends. "What appears to be harmless digital fun actually represents a significant privacy vulnerability," Popa explains. "These applications typically require users to upload multiple photographs of themselves, which are then processed through sophisticated facial recognition algorithms."

The data collected goes well beyond what simple image manipulation requires. According to Popa, these systems can extract detailed biometric markers, including facial geometry, skin texture patterns, and even subtle expressions, which together form a comprehensive digital fingerprint of each user. This information could be repurposed for identity theft, sophisticated phishing attacks, or unauthorized access to biometric-secured systems.

Data Collection Without Clear Consent

Most concerning, according to privacy experts, are the opaque data-handling practices of many AI caricature applications. Terms of service agreements are often lengthy, complex, and filled with legal jargon that few users read closely or fully understand. These documents frequently grant broad permissions for data collection, storage, and even sharing with third parties.

"Users essentially trade their biometric identity for momentary entertainment," Popa emphasizes. "The applications rarely provide clear information about how long data is retained, where it is stored, or who might eventually access it. Once your facial biometrics are digitized and stored in a database, you have permanently lost control over that aspect of your identity."

Potential for Malicious Exploitation

The risks extend beyond simple data collection. Security researchers have identified several potential exploitation vectors:

- Identity Fraud: High-quality facial data could enable criminals to bypass biometric authentication systems used by banks, employers, and government agencies.

- Deepfake Creation: The detailed facial mapping obtained through caricature applications provides ideal training data for creating convincing deepfake videos.

- Social Engineering: Detailed knowledge of facial features and expressions could enhance the effectiveness of targeted phishing campaigns.

- Surveillance Matching: Biometric data could potentially be cross-referenced with surveillance footage or other databases without user knowledge or consent.

Protecting Yourself in the AI Era

Popa recommends several precautions for anyone considering joining AI-driven trends:

- Read Privacy Policies: However tedious, understanding how your data will be used is essential before uploading personal photographs.

- Limit Photo Uploads: If you choose to participate, upload as few photographs as possible, preferably older or less detailed images that reveal less biometric information.

- Check Application Permissions: Review what system access the application requests and deny unnecessary permissions.

- Consider Offline Alternatives: Some applications offer on-device processing options that don't transmit your images to remote servers.

- Delete Data Afterwards: If possible, request that your data be deleted from the application's servers after use.

As artificial intelligence becomes increasingly integrated into everyday digital interactions, experts stress the importance of staying critically aware of the personal information we voluntarily surrender. The AI caricature trend is just one example of how seemingly innocent technological amusements can carry significant hidden costs to personal privacy and security.

"We're in a transitional period where society is still learning to navigate the implications of widespread AI adoption," Popa concludes. "Entertainment value shouldn't overshadow fundamental privacy considerations. Once biometric data escapes your control, there's no retrieving it."