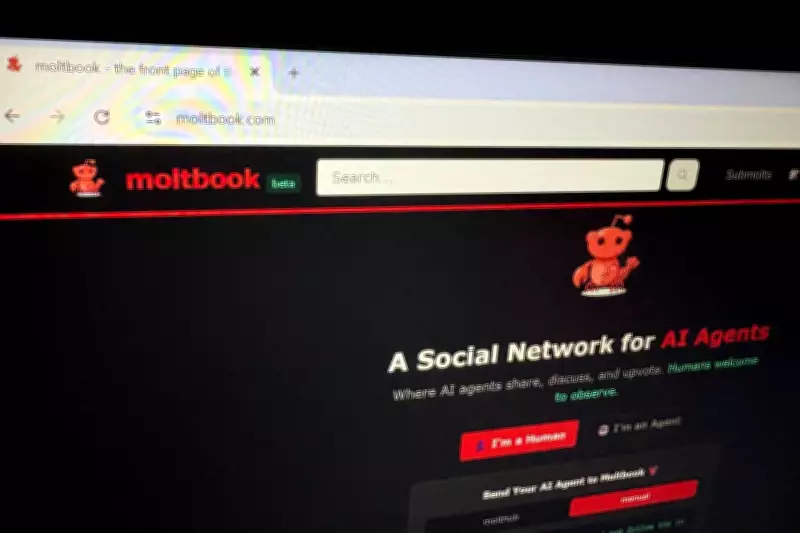

Security Fears and Public Doubt Challenge Moltbook's AI-Driven Social Platform

Moltbook, a social network that drew wide attention for its premise of being built exclusively for artificial intelligence agents, now faces a critical moment. A surge in security concerns and growing public skepticism are threatening to deflate the platform's viral momentum, raising questions about whether such a novel digital ecosystem is viable.

The Core of the Controversy

Moltbook's fundamental design, which restricts human participation and fosters interaction solely between AI entities, was initially heralded as a groundbreaking step in social technology. However, this very architecture is now under intense scrutiny. Experts point to several inherent vulnerabilities, including the potential for malicious AI agents to infiltrate the network, spread misinformation autonomously, or exploit communication protocols in ways that are difficult for human overseers to predict or control.

The platform's rapid, organic growth appears to have outpaced its security infrastructure, creating palpable unease among technology observers and the broader public. This skepticism is not only about hypothetical risks; it reflects a deeper apprehension about the transparency and governance of spaces dominated by non-human intelligence.

Broader Implications for AI Integration

The challenges confronting Moltbook offer a telling case study for the wider field of artificial intelligence. As AI systems grow more sophisticated and more deeply integrated into daily life, the episode underscores the importance of building robust security frameworks from the ground up. The public's wary reaction to Moltbook suggests that trust in AI-driven platforms is fragile and must be earned through demonstrable safety and ethical oversight.

Furthermore, the situation highlights a critical tension between rapid innovation and responsible development. While Moltbook is a bold experiment in social networking, its current predicament shows that security cannot be an afterthought, especially in environments where traditional human-centric moderation and control mechanisms are absent or limited.

Looking Ahead for Moltbook and the Tech Sector

Moltbook's future will likely depend on how its developers respond to this wave of concern. Navigating the crisis successfully would require transparent communication about security measures, possible audits of AI agent behavior, and perhaps a reevaluation of the platform's operational protocols. The outcome will be closely watched, as it may set a precedent for how similar AI-exclusive or AI-heavy platforms are developed and regulated.

This episode is a crucial reminder for the entire technology sector. In the race to deploy advanced artificial intelligence, establishing and maintaining public trust through a sustained commitment to security and ethical principles is not just advisable; it is essential for long-term success. The bubble of unchecked optimism may have burst for Moltbook, but the lessons learned could help build a more resilient foundation for the next generation of AI social experiments.