Qodo 2.0 Transforms AI Code Review with Enhanced Precision and Enterprise Trust

NEW YORK, February 4, 2026 – Qodo has unveiled the second generation of its AI code review platform, designed to transform high-velocity code generation into high-quality software while establishing AI code review as essential trust and governance infrastructure for enterprises. The release of Qodo 2.0 introduces significant advancements in context engineering and multi-agent architecture, addressing critical gaps in managing and enforcing AI code quality at scale.

Addressing the Trust Gap in AI-Assisted Development

AI-assisted development has rapidly moved from experimental technology to mainstream practice in enterprise software engineering. Gartner projects that by 2028, 90% of enterprise software engineers will use AI code assistants, up from less than 14% in 2024. Despite this widespread adoption, trust in AI-generated code remains limited.

Qodo's own State of AI Code Quality report reveals that 46% of developers actively distrust the accuracy of AI-generated code, while 60% of those using AI for writing, testing, or reviewing code report that these tools frequently miss critical context. First-generation AI review tools often struggle to distinguish between critical issues and trivial suggestions, leading to developer fatigue and inconsistent standards enforcement across organizations.

Multi-Agent Architecture for Enhanced Accuracy

To overcome these challenges and win developer trust, Qodo 2.0 introduces a multi-agent system for AI code review. Rather than relying on a single generalist agent, the platform breaks each review into focused tasks handled by a set of specialized expert agents. Each agent uses context engineering to pull relevant information from across the codebase, past pull requests, and prior review decisions, delivering more accurate findings and actionable guidance.

"AI speed doesn't matter if you can't trust what you're shipping," emphasized Itamar Friedman, CEO and co-founder of Qodo. "Enterprises need AI code review that verifies for quality and catches actual problems, not generalist models that flag everything and don't have enough context to make findings relevant and actionable. Qodo 2.0 bridges this gap, setting a new standard for how enterprises build with AI."

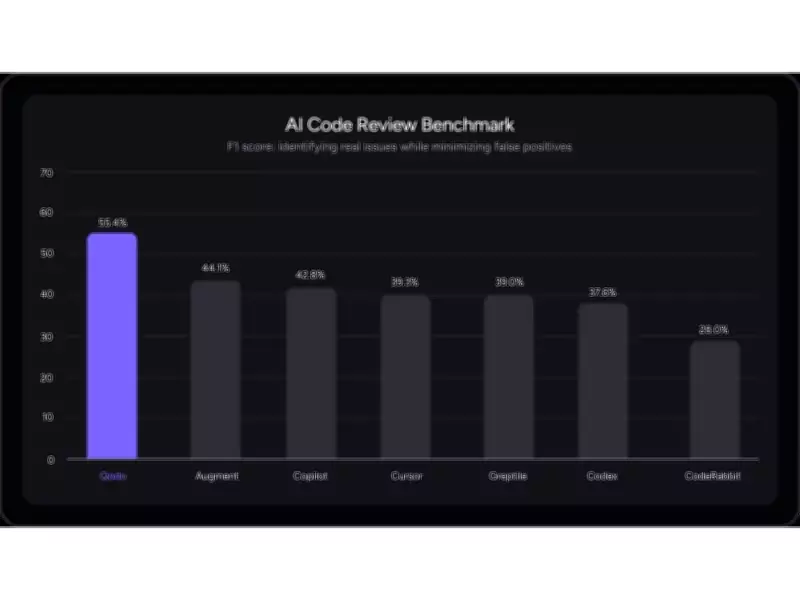

Industry Benchmark Validates Performance Gains

To substantiate its performance claims, Qodo developed an industry benchmark that evaluates AI code review tools on their ability to detect critical issues and rule violations. The benchmark runs each tool against pull requests drawn from active open-source repositories into which real-world bugs have been injected. According to the results, Qodo 2.0 delivers the highest precision and recall in the market, outperforming alternative code review tools by 11%.

Organizations including Monday.com and Box have already adopted Qodo 2.0 to manage high-velocity AI-assisted development at scale. The platform is available now to enterprise customers.

Company Background and Funding

Founded in 2022, Qodo is an AI code review platform built to turn today's high-velocity code generation into high-quality software, serving as trust and governance infrastructure for enterprise engineering teams. The company has raised $50 million from investors including TLV Partners, Vine Ventures, Susa Ventures, and Square Peg, as well as angel investors from leading technology companies such as OpenAI, Shopify, and Snyk.

With Qodo 2.0, the company introduces advanced context engineering and a multi-agent review system that leverages full-repository signals, including codebase history and prior pull request decisions, to deliver more accurate, explainable, and actionable feedback while reducing noise and enforcing organization-specific standards.